Hey @Mark285907,

Thanks for the code snippet, which helps in figuring out what’s going on!

I think the only way around this is to re-encode the video. With -c copy, ffmpeg passes the already-encoded stream straight through, so filters like drawtext never get to touch the frames. Re-encoding lets ffmpeg actually process the frames and add the text overlay before sending them out over the stream. The catch is that re-encoding is more CPU-heavy, so depending on your setup, it might introduce some lag or a drop in frame rate.

Try removing -c copy and instead tell ffmpeg to re-encode the video so it can overlay the text.

Test your command with this:

ffmpeg_cmd = [

"ffmpeg",

"-fflags", "+genpts",

"-analyzeduration", "10000000",

"-probesize", "5000000",

"-re",

"-f", "h264",

"-i", "-",

"-vf", "drawtext=fontfile=/usr/share/fonts/truetype/dejavu/DejaVuSans-Bold.ttf:"

" text='PiZeroW2 Cam %{localtime\\:%Y-%m-%d %H\\\\:%M\\\\:%S}':"

" fontcolor=white: fontsize=24: box=1: boxcolor=black@0.5:"

" x=10: y=10",

"-c:v", "libx264",

"-preset", "veryfast",

"-f", "rtsp",

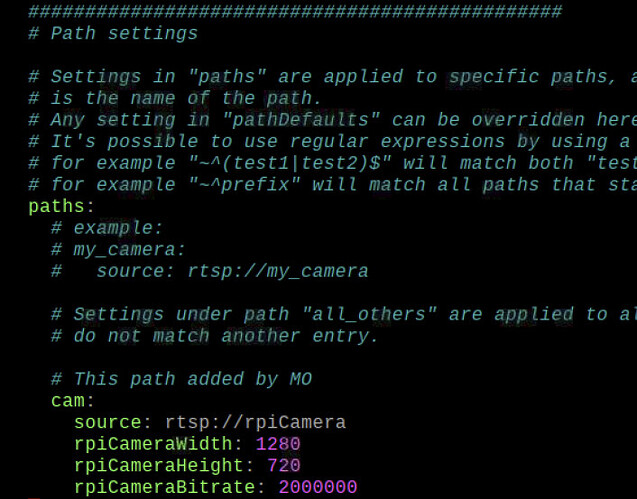

"rtsp://localhost:8554/cam"

]

It's pretty similar to what you've already got: this command just tells ffmpeg to decode the input, add the overlay, and then re-encode it with libx264. The veryfast preset should help keep CPU usage manageable.
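If it helps with experimenting, here is a rough sketch of how the command could be wrapped so the label and preset are easy to swap while you test. The names build_overlay_cmd and start_stream are made up for this example, and I'm assuming your script already writes raw H.264 bytes to ffmpeg's stdin and that the label contains no quotes or colons. The timestamp escaping is copied unchanged from the command in this post.

```python
import subprocess

# Font path taken from the command in this post; adjust if yours differs.
FONT = "/usr/share/fonts/truetype/dejavu/DejaVuSans-Bold.ttf"


def build_overlay_cmd(label="PiZeroW2 Cam",
                      rtsp_url="rtsp://localhost:8554/cam",
                      preset="veryfast"):
    """Hypothetical helper: build the re-encoding ffmpeg command as a list."""
    # Same escaping as in the posted command (not re-derived here).
    timestamp = "%{localtime\\:%Y-%m-%d %H\\\\:%M\\\\:%S}"
    drawtext = (
        f"drawtext=fontfile={FONT}:"
        f"text='{label} {timestamp}':"
        "fontcolor=white:fontsize=24:box=1:boxcolor=black@0.5:"
        "x=10:y=10"
    )
    return [
        "ffmpeg",
        "-fflags", "+genpts",
        "-analyzeduration", "10000000",
        "-probesize", "5000000",
        "-re",
        "-f", "h264",
        "-i", "-",            # read the H.264 stream from stdin
        "-vf", drawtext,      # filters only run when the video is re-encoded
        "-c:v", "libx264",    # re-encode instead of -c copy
        "-preset", preset,
        "-f", "rtsp",
        rtsp_url,
    ]


def start_stream(cmd):
    """Hypothetical helper: launch ffmpeg with a pipe you can write frames to."""
    return subprocess.Popen(cmd, stdin=subprocess.PIPE)
```

You would then call something like proc = start_stream(build_overlay_cmd(preset="ultrafast")) and write your camera output to proc.stdin. Trying a faster preset such as ultrafast is one way to trade some quality for lower CPU load if veryfast turns out to be too heavy.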

Give it a try and see how your setup handles the performance.
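One rough way to judge the performance is to watch the status line ffmpeg prints to stderr, which normally includes fps= and speed= fields. Below is a small sketch that pulls those two numbers out of such a line. The function names and the 0.95 threshold are my own choices, and the regex assumes the usual status-line layout, so treat it as a starting point. Also note that ffmpeg usually ends these status updates with a carriage return rather than a newline, so you may need to split on "\r" when reading stderr.

```python
import re

# Matches the fps and speed fields of a typical ffmpeg status line (assumed layout).
_STATS = re.compile(r"fps=\s*(?P<fps>[\d.]+).*?speed=\s*(?P<speed>[\d.]+)x")


def parse_stats(line):
    """Return (fps, speed) from an ffmpeg status line, or None if absent."""
    match = _STATS.search(line)
    if match is None:
        return None
    return float(match["fps"]), float(match["speed"])


def keeping_up(line, min_speed=0.95):
    """True if the encoder runs at roughly real time; None if the line has no stats."""
    stats = parse_stats(line)
    if stats is None:
        return None
    return stats[1] >= min_speed
```

A speed that stays well below 1x suggests the encoder cannot keep up with the camera, which is when you would expect growing lag or dropped frames.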